Il-Kyu Shin¹, Cengiz Öztireli², Hyeon-Joong Kim¹,³, Thabo Beeler⁴, Markus Gross²,⁴, Soo-Mi Choi¹

¹ Sejong University, Seoul, Korea · ² ETH Zürich, Zürich, Switzerland

³ 3D Systems, Inc., Seoul, Korea · ⁴ Disney Research Zürich, Zürich, Switzerland

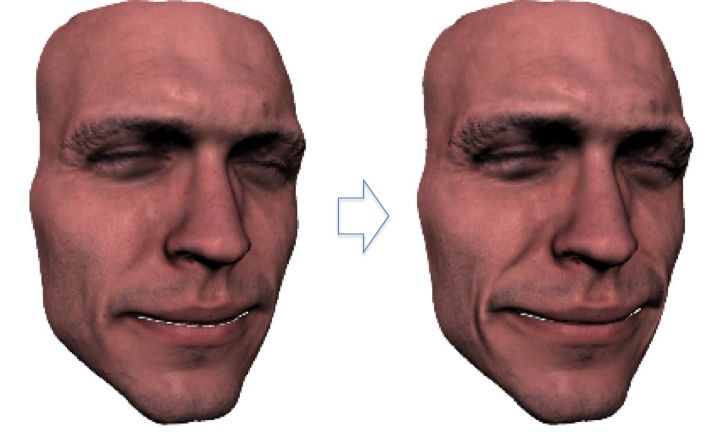

Creating realistic models and animations of human faces with an adequate level of detail is very challenging because facial deformations are highly complex and the human visual system is especially sensitive to faces. One way to overcome this challenge is to use face scans captured from real facial performances. However, capturing the required level of detail is only possible with high-end systems in controlled environments. Hence, a large body of work is devoted to enhancing already captured or modeled low-resolution faces with details extracted from high-fidelity facial scans. Details due to expression wrinkles are of particular importance for realistic facial animations. In this paper, we propose a new method to extract expression wrinkles from a high-resolution example face model and transfer them to a target model, enhancing the realism of facial animations. We define multi-scale maps that capture the essential details due to expression wrinkles on given example face models, and use these maps to transfer the details to a target face. The maps are interpolated in time to generate enhanced facial animations. We carefully design the detail maps such that transfer and interpolation can be performed very efficiently using linear methods.
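To make the idea of linear interpolation and transfer concrete, the following is a minimal illustrative sketch, not the method of the paper. It assumes, purely for illustration, that a detail map can be reduced to one scalar offset per sample, that maps at two key expressions are blended linearly in time, and that transfer amounts to adding the blended offsets to the target surface. The names `DetailMap`, `lerp_maps`, and `apply_detail` are hypothetical.

```python
from typing import List

# Assumption: a detail map is a flat list with one scalar offset per sample
# (e.g. per vertex or texel). The paper's multi-scale maps are richer than this.
DetailMap = List[float]


def lerp_maps(a: DetailMap, b: DetailMap, t: float) -> DetailMap:
    """Linearly blend two detail maps; t=0 gives a, t=1 gives b."""
    if len(a) != len(b):
        raise ValueError("detail maps must have the same size")
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def apply_detail(base: List[float], detail: DetailMap, scale: float = 1.0) -> List[float]:
    """Add (optionally scaled) detail offsets to a base surface, sample by sample."""
    if len(base) != len(detail):
        raise ValueError("base and detail must have the same size")
    return [h + scale * d for h, d in zip(base, detail)]


# Hypothetical key-frame maps: no wrinkles at rest, wrinkles at full expression.
neutral = [0.0, 0.0, 0.0]
smile = [0.5, -0.25, 1.0]

# Detail map halfway through the expression, then added to a coarse target.
halfway = lerp_maps(neutral, smile, 0.5)
enhanced = apply_detail([1.0, 2.0, 3.0], halfway)
```

Because both steps are linear in the map values, each costs one multiply-add per sample. This is the kind of efficiency the abstract attributes to linear methods, although the actual map definition may differ substantially from this simplification.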

Links:

PDF Project page